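```r
# Adjust this path to the folder that holds the SMSSpamCollection file
setwd("D:/data/ML2019/")

library(data.table)
library(wordcloud)
library(RColorBrewer)
library(tm)
library(magrittr)
library(e1071)
library(caret)

# Tab-separated file: column 1 is the label (ham/spam), column 2 the message.
# quote = "" stops fread from treating quotation marks inside messages as delimiters.
sms_raw <- fread("SMSSpamCollection", header = FALSE, encoding = "Latin-1",
                 sep = "\t", quote = "")
colnames(sms_raw) <- c("type", "text")
sms_raw$type <- factor(sms_raw$type)

# Class counts and class proportions
table(sms_raw[, type])
table(sms_raw[, type]) %>% prop.table()
```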
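The class proportions are also the yardstick for the accuracy figures computed later: a classifier that always predicts the majority class (ham, in the SMS Spam Collection) is right exactly as often as that class's share of the data. A minimal base-R sketch of this baseline; the label vector `type` below is hypothetical, not the real data.

```r
# Hypothetical labels standing in for sms_raw$type
type <- factor(c(rep("ham", 8), rep("spam", 2)))

class_props <- prop.table(table(type))

# Always predicting the majority class is right this often
majority_class    <- names(which.max(class_props))
baseline_accuracy <- max(class_props)

majority_class
baseline_accuracy
```

Any accuracy from `mean(sms_test_pred_1 == sms_raw_test$type)` is best read against this baseline rather than against zero.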

```r
# Word clouds of the most frequent words in each class
pal <- brewer.pal(7, "Dark2")

sms_raw[type == "spam", text] %>%
  wordcloud(min.freq = 20, random.order = FALSE, colors = pal)

sms_raw[type == "ham", text] %>%
  wordcloud(min.freq = 70, random.order = FALSE, colors = pal)
```
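When `wordcloud()` receives raw message text instead of explicit frequencies, it tallies word frequencies itself and drops words below `min.freq`. The sketch below reproduces only that tallying idea in base R on two hypothetical messages; `count_words()` is an illustrative helper and skips the extra text cleaning `wordcloud()` performs internally.

```r
# Hypothetical messages; the script passes sms_raw[type == "spam", text]
spam_texts <- c("WIN a FREE prize now", "Free entry: win cash!")

# Tally lower-cased words and keep those occurring at least min_freq times
count_words <- function(x, min_freq = 1) {
  words <- unlist(strsplit(tolower(x), "[^a-z]+"))
  words <- words[nchar(words) > 0]          # drop empty strings left by the split
  freq <- sort(table(words), decreasing = TRUE)
  freq[freq >= min_freq]
}

count_words(spam_texts, min_freq = 2)
```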

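```r
# Stratified 75/25 split: for a factor outcome, createDataPartition samples
# within each class so the ham/spam ratio stays similar in both sets.
set.seed(2019)  # added for reproducibility; the seed value itself is arbitrary
train_index <- createDataPartition(sms_raw$type, p = 0.75, list = FALSE)
sms_raw_train <- sms_raw[train_index, ]
sms_raw_test  <- sms_raw[-train_index, ]

dim(sms_raw_train)
dim(sms_raw_test)
nrow(sms_raw_train) / nrow(sms_raw_test)  # about 3 for p = 0.75

# Class proportions should match those of the full data set
table(sms_raw_train[, type]) %>% prop.table()
table(sms_raw_test[, type]) %>% prop.table()
```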
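`createDataPartition()` samples within each level of a factor outcome, which is why the two `prop.table()` checks should print nearly identical proportions. A base-R sketch of the same idea, assuming simple per-class `floor()` rounding (caret's own rounding and sampling details may differ); `stratified_split()` is a hypothetical helper and the labels are made up.

```r
# Sketch of a stratified split: sample a fraction p of the rows within each class
stratified_split <- function(y, p = 0.75) {
  by_class <- split(seq_along(y), y)        # row indices grouped by class label
  train <- lapply(by_class, function(rows) {
    n_train <- floor(length(rows) * p)
    rows[sample.int(length(rows), n_train)]
  })
  sort(unlist(train, use.names = FALSE))
}

set.seed(1)
y <- factor(c(rep("ham", 80), rep("spam", 20)))   # hypothetical labels
train_rows <- stratified_split(y, p = 0.75)

prop.table(table(y[train_rows]))   # same 80/20 mix as y
prop.table(table(y[-train_rows]))
```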

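```r
# Build a volatile corpus from a character vector of messages
corpus <- function(x) VectorSource(x) %>% VCorpus(readerControl = list(reader = readPlain))

# Normalise the text: lower-case, drop digits, drop English stop words,
# drop punctuation, then collapse repeated whitespace.
# Removing stop words before punctuation lets contractions such as "don't"
# still match the stop-word list.
clean <- function(x) {
  x %>%
    tm_map(content_transformer(tolower)) %>%
    tm_map(content_transformer(removeNumbers)) %>%
    tm_map(content_transformer(removeWords), stopwords()) %>%
    tm_map(content_transformer(removePunctuation)) %>%
    tm_map(content_transformer(stripWhitespace))
}

sms_corpus_train <- corpus(sms_raw_train[, text]) %>% clean
sms_corpus_test  <- corpus(sms_raw_test[, text]) %>% clean

# Compare a raw message with its cleaned version
sms_raw_train[1, text]
inspect(sms_corpus_train[[1]])
sms_raw_test[1, text]
inspect(sms_corpus_test[[1]])
```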
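The effect of the five `clean()` steps is easier to see on a single string. The sketch approximates each tm transformation with a base-R regular expression; the short list `my_stopwords` is hypothetical (tm's `stopwords()` returns a much longer English list), and the final `trimws()` is an extra that `stripWhitespace` does not perform.

```r
# Hypothetical stop-word list for illustration only
my_stopwords <- c("i", "you", "to", "the", "a", "me", "don't")

clean_text <- function(x, stop = my_stopwords) {
  x <- tolower(x)
  x <- gsub("[[:digit:]]+", "", x)                          # drop numbers
  pattern <- paste0("\\b(", paste(stop, collapse = "|"), ")\\b")
  x <- gsub(pattern, "", x, perl = TRUE)                    # drop stop words
  x <- gsub("[[:punct:]]+", "", x)                          # drop punctuation
  x <- gsub("[[:space:]]+", " ", x)                         # collapse whitespace
  trimws(x)
}

clean_text("Call me on 0800 123456 to WIN a prize! I don't care.")
# "call on win prize care"
```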

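```r
# Document-term matrix of the training corpus (rows = messages, columns = terms)
sms_dtm_train_all <- DocumentTermMatrix(sms_corpus_train)

# Keep only terms that occur at least 5 times in total across the training messages
sms_dict <- findFreqTerms(sms_dtm_train_all, 5)

# Restrict both matrices to the same dictionary so train and test
# share the same set of terms
sms_dtm_train <- DocumentTermMatrix(
  sms_corpus_train, control = list(dictionary = sms_dict)
)
sms_dtm_test <- DocumentTermMatrix(
  sms_corpus_test, control = list(dictionary = sms_dict)
)
```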
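The dictionary step keeps the training and test matrices aligned: test messages are counted only against terms chosen from the training data, and test words outside that list are ignored. A base-R sketch on toy documents, using a threshold of 2 instead of the script's 5 so the tiny example keeps some terms; `term_counts()` is a hypothetical helper, and real tm matrices are sparse rather than dense.

```r
# Toy pre-cleaned documents (hypothetical); tokens are separated by single spaces
docs_train <- c("free prize win", "win win cash", "free win now", "see you soon")
docs_test  <- c("win a free phone", "see you")

# Count matrix: one row per document, one column per term in `vocab`
term_counts <- function(docs, vocab) {
  rows <- lapply(strsplit(docs, " ", fixed = TRUE), function(tokens) {
    idx <- match(tokens, vocab)                 # NA for out-of-vocabulary tokens
    tabulate(idx[!is.na(idx)], nbins = length(vocab))
  })
  m <- do.call(rbind, rows)
  colnames(m) <- vocab
  m
}

# 1. Full training matrix over every training term
vocab_all <- sort(unique(unlist(strsplit(docs_train, " ", fixed = TRUE))))
dtm_train_all <- term_counts(docs_train, vocab_all)

# 2. Dictionary: terms whose total count over all training documents is >= min_freq
min_freq <- 2
dict <- colnames(dtm_train_all)[colSums(dtm_train_all) >= min_freq]

# 3. Both matrices restricted to the same dictionary
dtm_train <- term_counts(docs_train, dict)
dtm_test  <- term_counts(docs_test, dict)
dtm_test
```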

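```r
# e1071's naiveBayes() models numeric predictors as Gaussian, so turn each
# count into a categorical presence flag instead
convert_counts <- function(x) {
  ifelse(x > 0, "Yes", "No")
}

# MARGIN = 2 applies the function term by term (column by column);
# the result is a dense character matrix
sms_train <- sms_dtm_train %>%
  apply(MARGIN = 2, convert_counts)
sms_test <- sms_dtm_test %>%
  apply(MARGIN = 2, convert_counts)
```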

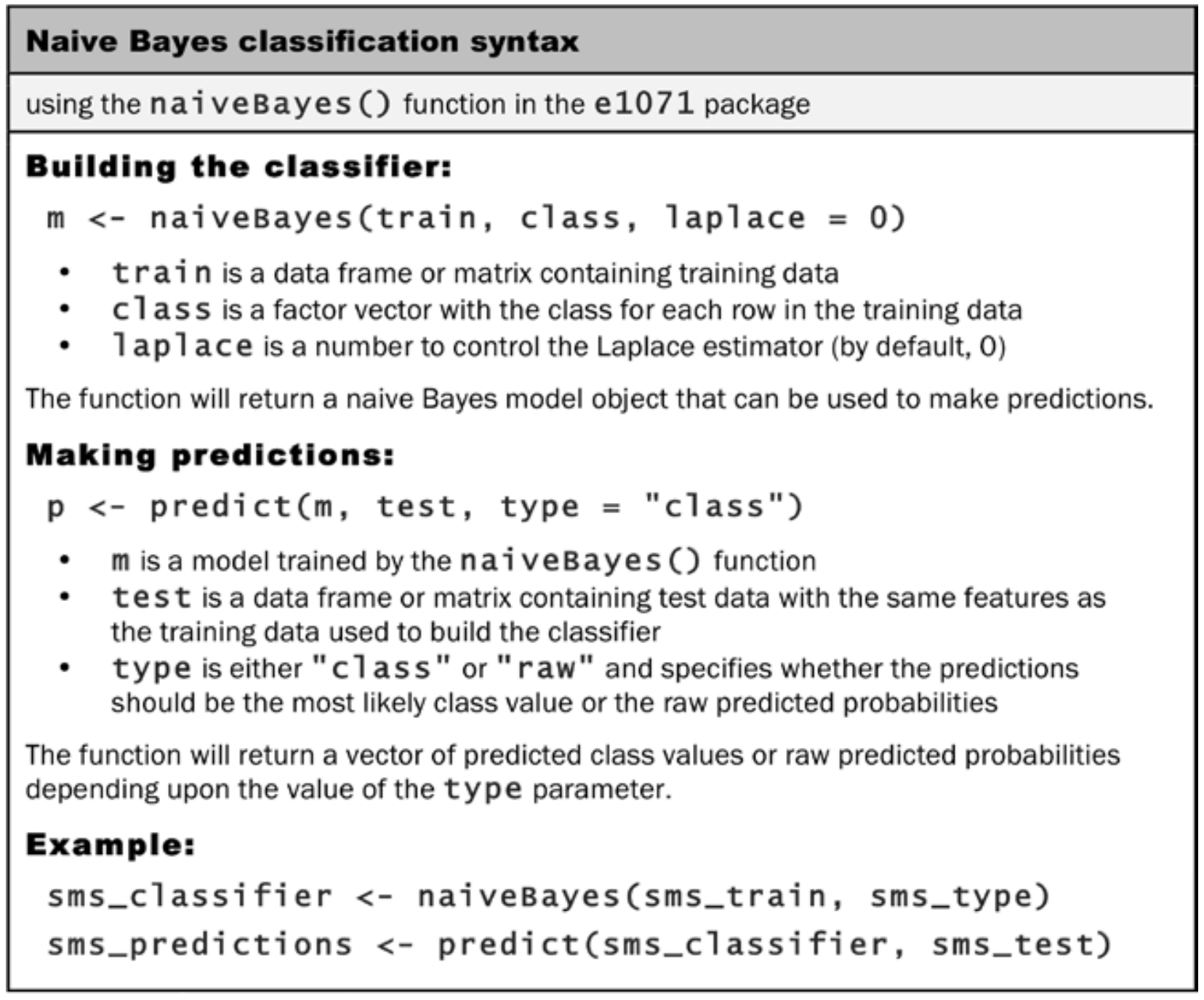
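Converting counts to "Yes"/"No" makes `naiveBayes()` estimate a per-class frequency table for each term rather than a Gaussian over counts. The sketch applies the same `convert_counts()` to a small toy matrix, then shows one optional variant (an assumption, not part of the original script): storing each term as a factor with both levels fixed, so a term that never occurs still carries the "Yes" level.

```r
convert_counts <- function(x) {
  ifelse(x > 0, "Yes", "No")
}

# Toy count matrix: 3 messages x 2 terms (hypothetical); "win" never occurs
counts <- matrix(c(0, 2, 1,
                   0, 0, 0), nrow = 3,
                 dimnames = list(NULL, c("free", "win")))

flags <- apply(counts, MARGIN = 2, convert_counts)
flags   # character matrix with the same shape as counts

# Optional variant: one factor per term with both levels always present
flags_df <- as.data.frame(lapply(as.data.frame(flags), factor, levels = c("No", "Yes")))
str(flags_df)
```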

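```r
# Train a Naive Bayes classifier (no Laplace smoothing) and predict the test set
sms_classifier_1 <- naiveBayes(sms_train, sms_raw_train$type)
sms_test_pred_1 <- predict(sms_classifier_1, sms_test)

# Confusion matrix and overall accuracy
if (!requireNamespace("gmodels", quietly = TRUE)) install.packages("gmodels")
library(gmodels)
CrossTable(x = sms_raw_test$type, y = sms_test_pred_1, prop.chisq = FALSE)
mean(sms_test_pred_1 == sms_raw_test$type)
```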
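`CrossTable()` prints the confusion matrix with row and column proportions, and `mean(pred == actual)` is the overall accuracy. With imbalanced classes, accuracy alone can hide missed spam, so spam precision and recall are worth reading off the same table. A base-R sketch on hypothetical labels:

```r
# Hypothetical test labels and predictions (6 ham, 4 spam)
lv <- c("ham", "spam")
actual    <- factor(rep(c("ham", "spam"), times = c(6, 4)), levels = lv)
predicted <- factor(c(rep("ham", 6), "spam", "ham", "spam", "ham"), levels = lv)

# Rows = actual class, columns = predicted class (same layout as CrossTable(x, y))
cm <- table(actual = actual, predicted = predicted)
cm

accuracy  <- sum(diag(cm)) / sum(cm)                  # equals mean(predicted == actual)
precision <- cm["spam", "spam"] / sum(cm[, "spam"])   # share of predicted spam that is spam
recall    <- cm["spam", "spam"] / sum(cm["spam", ])   # share of actual spam that was caught
c(accuracy = accuracy, precision = precision, recall = recall)
```

Here accuracy is 0.8 even though half of the spam messages were missed.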

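```r
# Same model with Laplace smoothing: laplace = 1 adds one pseudo-count per
# term value and class, so a term never seen with a class gets a small
# nonzero conditional probability instead of zero
sms_classifier_2 <- naiveBayes(sms_train, sms_raw_train$type, laplace = 1)
sms_test_pred_2 <- predict(sms_classifier_2, sms_test)
CrossTable(x = sms_raw_test$type, y = sms_test_pred_2, prop.chisq = FALSE)
mean(sms_test_pred_2 == sms_raw_test$type)
```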
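To see why `laplace = 1` matters, here is a from-scratch categorical Naive Bayes on made-up data. `nb_train()` and `nb_scores()` are hypothetical helpers, not e1071 functions. The sketch smooths only the per-class feature tables and leaves the class priors unsmoothed. e1071's internals may differ in detail; for example, its `predict()` also has a `threshold` argument that replaces near-zero probabilities.

```r
# Made-up training data: two "term present?" features and the class label
train <- data.frame(
  free = c("Yes", "Yes", "No", "No", "No", "No"),
  meet = c("No", "No", "Yes", "Yes", "No", "Yes"),
  type = c("spam", "spam", "ham", "ham", "ham", "ham")
)

# Per-class conditional probability tables with an optional Laplace pseudo-count
nb_train <- function(X, y, laplace = 0, levels = c("No", "Yes")) {
  y <- factor(y)
  tables <- lapply(X, function(col) {
    counts <- table(y, factor(col, levels = levels))
    (counts + laplace) / (rowSums(counts) + laplace * length(levels))
  })
  list(priors = prop.table(table(y)), tables = tables)
}

# Unnormalised posterior scores: prior times the product of conditional probabilities
nb_scores <- function(model, x) {
  scores <- setNames(as.vector(model$priors), names(model$priors))
  for (f in names(x)) {
    scores <- scores * as.vector(model$tables[[f]][, x[[f]]])
  }
  scores
}

new_msg  <- c(free = "Yes", meet = "Yes")
features <- train[c("free", "meet")]

nb_scores(nb_train(features, train$type, laplace = 0), new_msg)  # both classes score 0
s1 <- nb_scores(nb_train(features, train$type, laplace = 1), new_msg)
s1 / sum(s1)                                                    # normalised posterior
```

Without smoothing, a message containing both "free" and "meet" scores zero for both classes, because each class has one term it was never seen with. With `laplace = 1` both scores are positive and ham wins narrowly (2/27 against 1/16).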
